Using the alert(1) XSS payload doesn't actually tell you where the payload is executed. Choosing alert(document.domain) and alert(window.origin) instead tells you about where the code is being run, helping you determine whether you have a bug you can submit.

Introduction

Cross-site scripting, also known as XSS, is a type of security vulnerability in which malicious script is injected into otherwise trusted websites, typically to affect other users of the site. Injecting an XSS payload containing alert(1) makes a window pop up when the payload is executed, so the pop-up is evidence that the payload ran. Depending on where the code ran, there may be potential for injecting genuinely malicious code. This is also a kind of vulnerability that is reported via bug bounties!

The alert(1) XSS Payload

The first clear advantage of using the alert(1) XSS payload is that it is very visual. You can inject the code and see very clearly when it gets executed, which is convenient for webpages with lots of inputs. By varying the argument of the JavaScript alert() function (alert(1), alert(2), and so on), you can quickly tell which input the injection worked in.

There is a second upside to using alert(1). Some client-side JavaScript frameworks with templating allow a limited form of JavaScript, like printing scope variables or doing relatively basic math. Because of the limitations imposed by these frameworks, you cannot directly inject arbitrary malicious code, but if you manage to call window.alert(1), you have reached the window object, which the webpage itself depends on. The very same window object also holds the information that an attacker would be most interested in, such as window.localStorage or window.document.cookie. In this case, successfully executing the alert() can be an indication that your XSS finding has a high severity and should be reported.

These limited JavaScript templates, however, are no longer used as often as they used to be. Over time it became clear that the restrictions were too difficult to maintain, with a steady back-and-forth of fixes and bypasses to circumvent the limitations. There's some information on AngularJS sandboxing attempts if you are interested in the circumvention we just mentioned, available here. We also have a short playlist covering this topic, available here.

On the surface, alert(1) looks like a great test, since it seems to show whether your XSS injection is critically exploitable. It is not. A window popping up is not necessarily proof of a security vulnerability. Let's have a look at why, using Google's own Blogger service.

Just a quick note: keep in mind that the bug bounty scope may have changed between the time we recorded the video and wrote this post and the time you are reading this. At the time of recording the video and writing the blog post, the services in scope include:

*.google.com
*.youtube.com
*.blogger.com

For our example, all subdomains of blogger.com are in scope, which is exactly what we need.

XSS with Google Blogger

If you've started using Blogger and taken the time to explore the features, you might have noticed that you can inject some HTML and JavaScript. To do so, create a new blog post and head over to the Layout menu on the left sidebar. There, click on Add Gadget and then on HTML/JavaScript. As the name implies, it allows you to inject a script with an alert(1), like so:

<script>alert(1)</script>

Now, we don't know where this script actually gets executed, so let's just keep using the Blogger platform to finish our blog post. We type up a well-known snippet of text, and hit the Preview button on the top-right of the blog post page to see what we've come up with so far.

And look who's here: the alert(1) XSS payload trigger! Check out the browser address bar: the URL reads https://www.blogger.com/blog/post/edit/preview/..., so the site is in scope and this means we have found a bug, right?

Not quite, unfortunately. Let's examine why. By changing alert(1) to alert(document.domain) in our code, we have a payload that tells us what domain we're actually injecting the code into. In our case, it's usersubdomain.blogspot.com, and not blogger.com. The reason becomes clear if you use the developer tools to look at the webpage code. You'll see that the blogger.com webpage embeds the usersubdomain.blogspot.com site in an iframe, and the payload runs on the latter domain, which explains why the pop-up showed usersubdomain.blogspot.com instead of blogger.com.
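Once the payload has reported a domain, one quick check is whether that domain matches the scope list quoted earlier. The following is a minimal sketch of that check. The helper name hostInScope is hypothetical, and treating "*.example.com" as covering the bare domain too is an assumption; always defer to the program's own rules.

```javascript
// Hypothetical helper: does a hostname fall under a wildcard scope entry?
// Assumption: "*.example.com" covers any subdomain and the bare domain itself.
function hostInScope(hostname, scopePatterns) {
  const host = hostname.toLowerCase();
  return scopePatterns.some((pattern) => {
    const base = pattern.toLowerCase().replace(/^\*\./, "");
    // Require a leading dot so "evilblogger.com" does not match "blogger.com".
    return host === base || host.endsWith("." + base);
  });
}

// Scope entries as listed in this post at the time of writing.
const scope = ["*.google.com", "*.youtube.com", "*.blogger.com"];

console.log(hostInScope("www.blogger.com", scope));            // true
console.log(hostInScope("usersubdomain.blogspot.com", scope)); // false
```

The address bar and the domain reported by the payload give different answers here, which is exactly why the payload's answer is the one to trust.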

An important question we asked ourselves at this point is: why would Google use two different domains to implement the blogger service? Well, XSS is the reason. To protect themselves and their users, they use sandboxes, as mentioned here.

Sandboxes

Google specifically stipulates that they use

a range of sandbox domains to safely host various types of user-generated content. Many of these sandboxes are specifically meant to isolate user-uploaded HTML, JavaScript, or Flash applets and make sure that they can't access any user data.

So what's important about all of this? The point of an XSS attack is to access data that you supposedly aren't allowed to access. Take for example another user's cookies; these will be on the blogger.com domain, so an XSS attack launched from the usersubdomain.blogspot.com website cannot access the cookies, due to the same-origin policy. The same-origin policy ensures that a script in one webpage can only access another webpage if both pages have the same origin, meaning the same scheme, host, and port. In our case, we have our blog and its script in our sandbox, but the script cannot access anybody else's sandbox, since these webpages do not have the same origin.
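The origin comparison described above can be sketched with the standard URL class. This is only an illustration of the comparison itself: the function name sameOrigin is ours, and the two URLs are shortened stand-ins for the editor page and the embedded blog.

```javascript
// Two URLs share an origin only if scheme, host, and port all match.
function sameOrigin(urlA, urlB) {
  return new URL(urlA).origin === new URL(urlB).origin;
}

// Shortened stand-ins for the Blogger editor page and the embedded blog.
const editorPage = "https://www.blogger.com/";
const embeddedBlog = "https://usersubdomain.blogspot.com/";

console.log(new URL(editorPage).origin);           // https://www.blogger.com
console.log(new URL(embeddedBlog).origin);         // https://usersubdomain.blogspot.com
console.log(sameOrigin(editorPage, embeddedBlog)); // false
```

A script running on the second origin is treated as a stranger by the first, no matter how the two pages are nested on screen.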

This is why we want to use alert(document.domain) or alert(window.origin) payloads: they tell us exactly which domain the XSS is executing on, which is the only domain whose data we can access. In this case, it's usersubdomain.blogspot.com.

To summarize, Google lets users add custom HTML - and thus JavaScript - functionality to their blogs so that users have a chance to further customize the content. It's a great feature! To ensure that this feature cannot be used to attack other blog(ger)s with XSS injections, Google places each user's content in its own sandboxed environment and then embeds it into the blogger.com domain using an iframe. So, when using an XSS payload, use alert(document.domain) or alert(window.origin) so you can be sure about what domain or subdomain the XSS is getting executed on. This is a deciding factor in establishing whether you've found an actual security issue, or a dud.

Sandboxed iframes

Apart from sandboxing domains, it is also possible to sandbox iframes. We've actually discussed some of it before in a previous video (here); there, Google implemented a JSONP sandbox, where they injected an iframe with a user-controlled XSS payload, but also set the sandbox attribute on the iframe. Why? Let's have a look!

We implemented a simple tool that allows us to execute JavaScript expressions via eval.

function unsafe(t) {
  // Evaluate the user-supplied expression and show the result on the page.
  document.getElementById("result").innerText = eval(t);
}

For instance, we type 1+2 in the expression box, and the result returned is 3 (no surprises here!).

We've also implemented a secret session token,

document.secret = "SESSION_TOKEN";

which we can steal by injecting alert(document.secret): the resulting pop-up reads SESSION_TOKEN, demonstrating that the attack works.

Let's now modify the script a little to have the script execute within an iframe. We write the new unsafe function as

function unsafe(t) {
  var i = document.getElementById('result'); // get the <iframe>
  // Run the expression inside the iframe's own document instead of the page.
  i.srcdoc = "<body><script>document.write("+t+");<"+"/script></body>";
}

Note that the iframe is given the sandbox attribute:

<iframe id="result" sandbox="allow-scripts allow-modals"></iframe>

Our previous example of summing 1 and 2 still works.

If we execute alert(1), we get our pop-up window showing 1. So this code is as vulnerable as the previous example, right?

To find out, we try to get the secret session token with alert(document.secret).

It doesn't work! Let's see what we get if we input alert(window.origin) or alert(document.domain). The first shows null and the second shows nothing at all! Why is this?

This is different from the sandboxed subdomains we saw in the Google Blogger example, but there are parallels. Just like the subdomains with the blogging platform, here the iframes are also isolated from the website they are embedded into, so you cannot access the secret session token.

The result of this experiment reinforces the value of using alert(document.domain) and alert(window.origin): they are extremely helpful in determining whether you have a valid security issue to submit as a bug bounty.
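To make the role of the sandbox attribute more concrete, here is a minimal sketch that summarises what a given attribute value permits. The helper describeSandbox and its field names are hypothetical and only cover the three tokens relevant to this experiment; the browser's real behaviour is defined by the HTML standard, not by this function.

```javascript
// Hypothetical helper: summarise what a sandbox attribute value permits.
// Only the tokens relevant to this article are inspected.
function describeSandbox(attributeValue) {
  const tokens = new Set(
    attributeValue.toLowerCase().split(/\s+/).filter(Boolean)
  );
  return {
    // Without allow-scripts, no JavaScript runs in the frame at all.
    scriptsAllowed: tokens.has("allow-scripts"),
    // Without allow-modals, alert() is blocked.
    modalsAllowed: tokens.has("allow-modals"),
    // Without allow-same-origin, the frame gets an opaque origin:
    // window.origin reads "null" and document.domain is empty.
    keepsRealOrigin: tokens.has("allow-same-origin"),
  };
}

// The attribute value used in the experiment above.
console.log(describeSandbox("allow-scripts allow-modals"));
```

For the iframe in our experiment, scripts and modals are allowed but the real origin is not kept, which matches what we observed: alert(1) fires, yet the frame is cut off from the embedding page.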

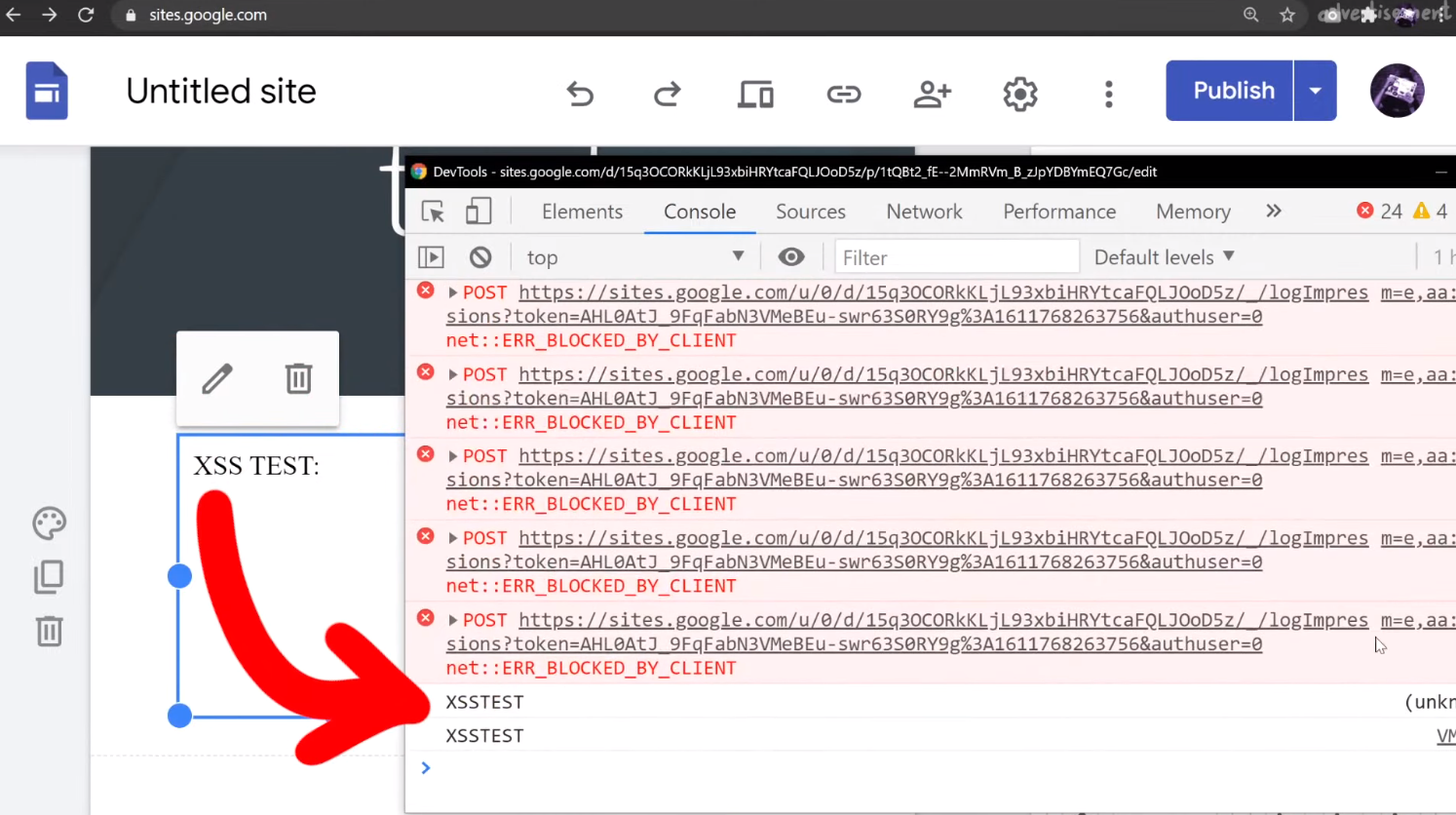

Console Logs

When you find an injection into a sandboxed iframe, typically the allow-modals option in the sandbox attribute is not enabled. For instance, with sites.google.com, we can create our own webpage. Let's use it to embed some raw HTML and JavaScript!

XSS TEST:
<script>alert(1)</script>

However, when we inject this payload, nothing happens.

We can investigate further with the console in the browser's developer tools. You might notice in the log that the alert(1) call was blocked by a sandboxed iframe. Use the console's filter if you can't find the message in the log.

In this case, it's actually better to use

XSS TEST:
<script>console.log("XSSTEST")</script>

to see where, or even whether, your XSS payload is being executed. You'll find the word XSSTEST in the console log, or whatever string you chose instead. Clearly, the payload was executed. Is this a bug?

Again, this unfortunately is not a vulnerability. Let's look at the actual execution context by checking (you guessed it) document.domain or window.origin. Because alert() is blocked here, modify your script to log the value instead:

XSS TEST:
<script>console.log("XSSTEST"+window.origin)</script>

Then you'll see that we are once more on a sandboxed domain, and that's exactly where the payload is being executed.
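A slightly richer logging payload can report both values at once. The sketch below is one way to write it. The function contextReport is a hypothetical helper that takes any window-like object so it can be tried outside a browser, and the two stand-in objects hold illustrative values, not captured output.

```javascript
// Build a one-line report of the execution context.
// `win` is any window-like object; in a browser you would pass `window`.
function contextReport(win) {
  const origin = win.origin;
  const domain = win.document && win.document.domain;
  return "XSSTEST origin=" + origin + " domain=" + (domain || "(empty)");
}

// Stand-ins for two contexts (illustrative values only).
const sandboxedFrame = { origin: "null", document: { domain: "" } };
const ordinaryPage = {
  origin: "https://example.com",
  document: { domain: "example.com" },
};

console.log(contextReport(sandboxedFrame)); // XSSTEST origin=null domain=(empty)
console.log(contextReport(ordinaryPage));   // XSSTEST origin=https://example.com domain=example.com
```

In an injected script you would call console.log(contextReport(window)) and then filter the console for XSSTEST.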

Closing Remarks

Throughout this article, we showed you that using alert(document.domain) and alert(window.origin) would tell you what domain or iframe your XSS payload is being executed in. In each example, we saw that the payloads were executed in isolated, sandboxed environments. This meant that none could access the object of interest, whether a secret session token, or another user's information.

So, why should you still investigate XSS injections in a sandboxed iframe or subdomain? It doesn't qualify for a bounty, so where is the incentive?

Take for example a website with an embedded (and sandboxed) JSONP iframe. The website will typically communicate with the iframe using postMessage, so there could be a way to exploit the messaging between the website and the iframe to have the XSS payload pushed to the website and executed there.

This is basically a sandbox escape, though the vulnerability is not with the first XSS in the sandbox, but rather the ability to escape and trigger the XSS on the website.
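When looking for such an escape, the interesting code is the message handler on the embedding website. The sketch below shows what a careful handler might check, so you know what a careless one is missing. Everything here is hypothetical: the function acceptMessage, the allow-list, and the example origin are assumptions for illustration, not taken from any real site.

```javascript
// Hypothetical handler logic for messages a page receives from embedded frames.
// trustedOrigins is an assumed allow-list. A sandboxed frame with an opaque
// origin shows up with event.origin equal to the string "null".
function acceptMessage(event, trustedOrigins) {
  if (!trustedOrigins.includes(event.origin)) {
    return null; // ignore messages from unexpected senders
  }
  if (typeof event.data !== "string") {
    return null; // this sketch only expects plain strings
  }
  // The caller should render the result as text, never as HTML.
  return event.data;
}

const trusted = ["https://widgets.example.com"];

console.log(acceptMessage({ origin: "null", data: "<img src=x onerror=alert(1)>" }, trusted)); // null
console.log(acceptMessage({ origin: "https://widgets.example.com", data: "hello" }, trusted)); // hello
```

In a browser this logic would sit inside window.addEventListener("message", ...). A handler that skips the origin check, or that writes event.data into the page as HTML, is the kind of weakness that could carry a payload out of the sandbox.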

In summary, Google allows XSS by design in sandboxed subdomains, and a simple alert is not enough to prove that you have uncovered a serious XSS issue. You should always check what domain the XSS is executed on by using the alert(document.domain) or alert(window.origin) payloads. Hopefully you can appreciate the value of sandboxing domains and iframe environments, at least from a defense standpoint. Don't be discouraged though! Having an alert(1) execute could be the start of uncovering something bigger, so keep at it and take notes! We just want you to understand the broader context so that you can better investigate and see if you can find a bug that can get you a bounty. Good luck hunting!